Designing CNNs for Secure Inference

CVPR Tutorial

Vishnu Boddeti

June 19, 2023

VishnuBoddeti

Machine Learning as a Service (MLaaS)

Fully Homomorphic Encryption (FHE)

Representation over Cyclotomic Polynomial Rings

- Message $\bm{m}\in \mathbb{C}^{\frac{N}{2}}$ Plaintext $\mu \in \mathcal{R}_{Q_\ell} = \mathbb{Z}_{Q_\ell}[X]/(X^N+1)$ Ciphertext $\bm{c}=(c_0, c_1) \in \mathcal{R}_{Q_\ell}^2$ Level $0\leq l\leq L$, multiplication consumes one level $l-1$. Bootstrapping can refresh $0$-level ciphtertext.

FHE Primitives

- Generate Keys:

- secret key: $s\gets\mathcal{R}_{Q_\ell}$

- public key: $\bm{p}=(-as+e,a)$, $a,e\gets\mathcal{R}_{Q_\ell}$

- Encryption: $\bm{c}=(\mu, 0)+\bm{p}=(\mu-as+e,a)=(c_0,c_1)$

- Decryption: $c_0+c_1s=\mu-as+e+as=\mu+e\approx \mu$

Homomorphic Operations

- Addition: $\bm{c} \oplus \bm{c}^\prime \cong \bm{m} + \bm{m}^\prime$

- Multiplication: $\bm{c}\otimes \bm{c}^\prime \cong \bm{m} \odot \bm{m}^\prime$

- Rotation: $\mathrm{Rot}(\bm{c}, l) \cong \mathrm{Rot}(\bm{m}, l)$

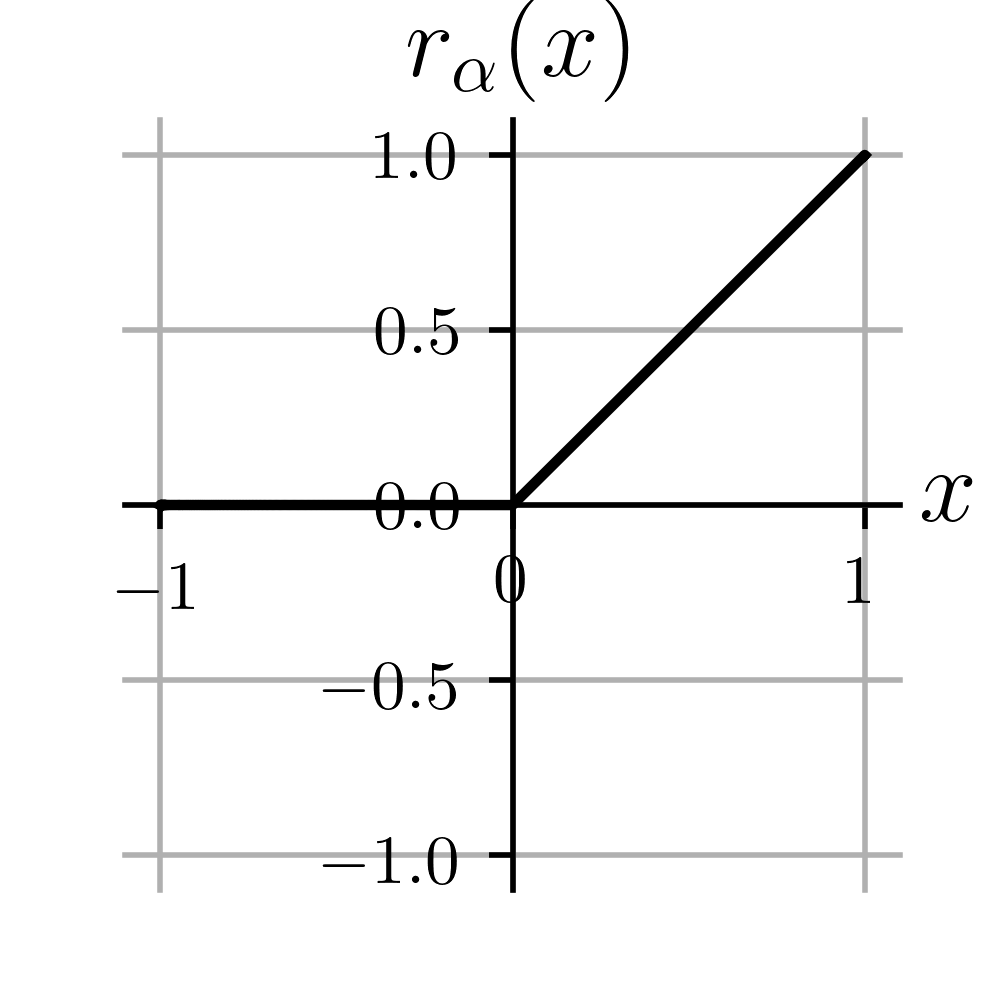

Activation Functions in FHE

Non-linear activation functions, like ReLU, are not supported on FHE.

Polynomial Evaluation under FHE

Complexity of FHE Operations

Primary Bottleneck: Bootstrapping

- Evaluating a deep neural network necessitates bootstrapping

- Bootstrapping dominates latency ($\mathbf{>70\%}$ of execution time)

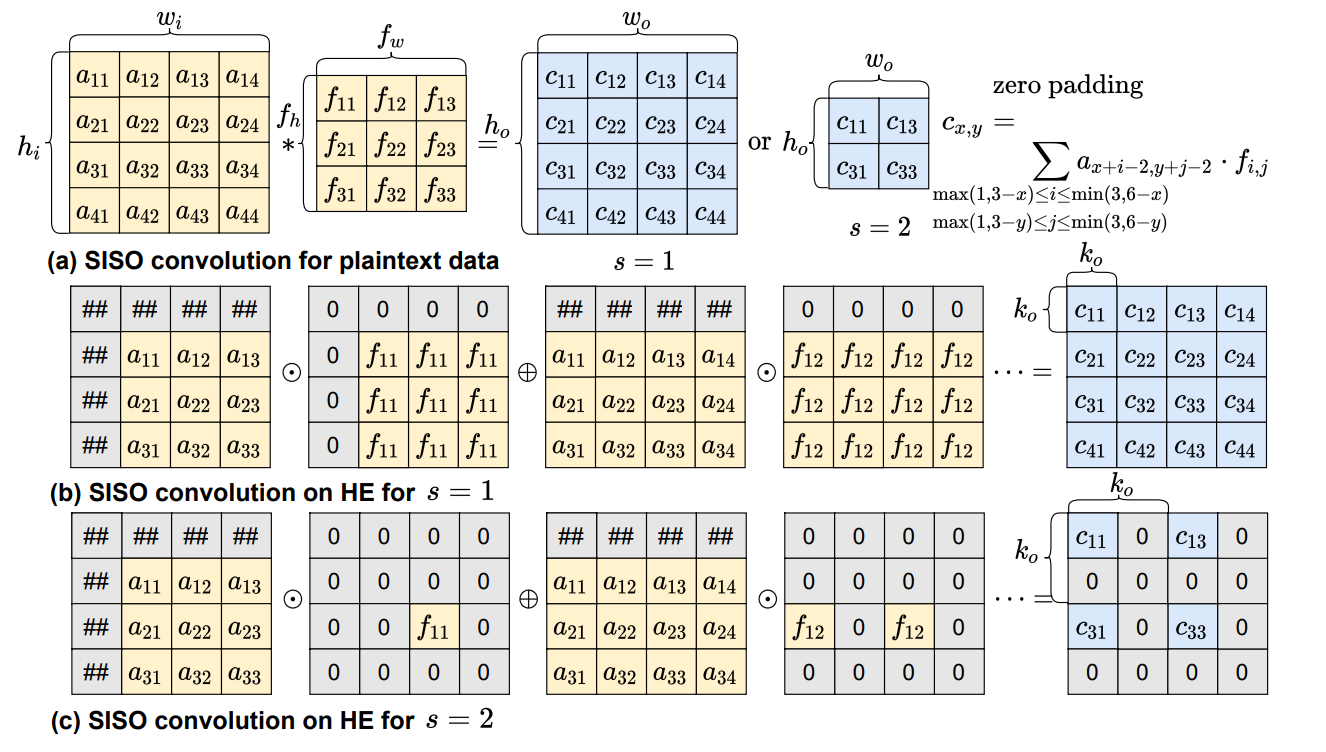

Convolution under FHE

- Low-Complexity Convolutional Neural Networks on Fully Homomorphic Encryption Using Multiplexed Parallel Convolutions, ICML 2022

Convolution under FHE

Polynomial Approximation of ReLU

- Low-Degree: Levels $\Downarrow$, precision $\Downarrow$

- Faster CryptoNets: Leveraging Sparsity for Real-World Encrypted Inference

- High-Degree: Levels $\Uparrow$, precision $\Uparrow$

- Precise Approximation of Convolutional Neural Networks for Homomorphically Encrypted Data

CNNs under FHE

| Operations | Support |

|---|---|

| Conv2D | ✓ |

| BatchNorm2D | ✓ |

| AvgPool | ✓ |

| Linear | ✓ |

| ReLU | ❌ |

| Bootstrapping | ✓ |

Polynomial Approximation of ReLU

- Level consumption/multiplication Depth = $\log_2\lceil\text{degree}\rceil$

- Degree $\mapsto$ Level consumption $\Uparrow$ $\mapsto$ Bootstrapping $\Uparrow$ $\mapsto$ Latency $\Uparrow\Uparrow\Uparrow$

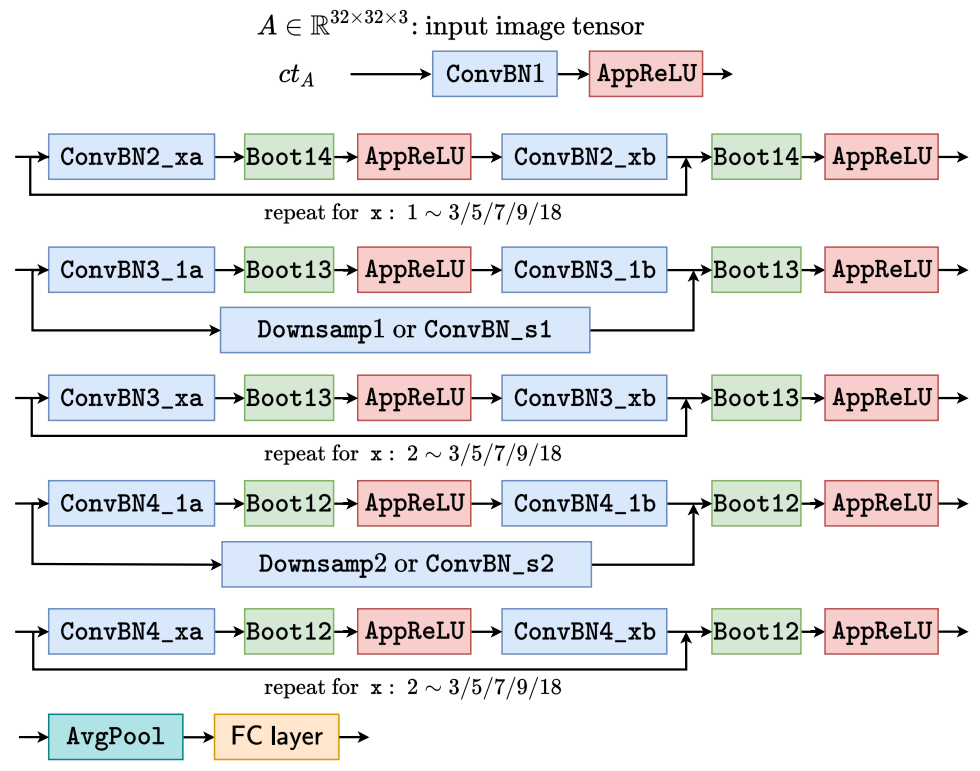

FHE-MP-CNN: a High-degree Polynomial Solution

- Homomorphic evaluation architecture of ResNets

- Experimental results on CIFAR datasets

- High-degree polynomials $\rightarrow$ Levels $\Uparrow \rightarrow$ Bootstrapping $\Uparrow \rightarrow$ Latency $\Uparrow$

- High-degree polynomials $\rightarrow$ Approximation Precision $\Uparrow \rightarrow$ Accuracy $\Uparrow$

- Low-Complexity Convolutional Neural Networks on Fully Homomorphic Encryption Using Multiplexed Parallel Convolutions, ICML 2022

Multi-Objective Conflict

Conflict between Latency and Accuracy

- Latency

- Low-degree Polynomials $\rightarrow$ Level Consumption $\Downarrow \rightarrow$ Bootstrapping $\Downarrow \rightarrow$ Latency $\Downarrow$

- Accuracy

- High-degree Polynomials $\rightarrow$ Approximation Precision $\Uparrow \rightarrow$ Accuracy $\Uparrow$

Layerwise Polynomials

- Layerwise mixed-degree polynomial networks leverage layerwise approximation sensitivity to accelerate inference while preserving accuracy.

Learn Polynomials and Networks

- Leverage both polynomial approximation of ReLU and learn neural network weights using gradient descent.

Bi-Level Multi-Objective Framework

Framework Overview

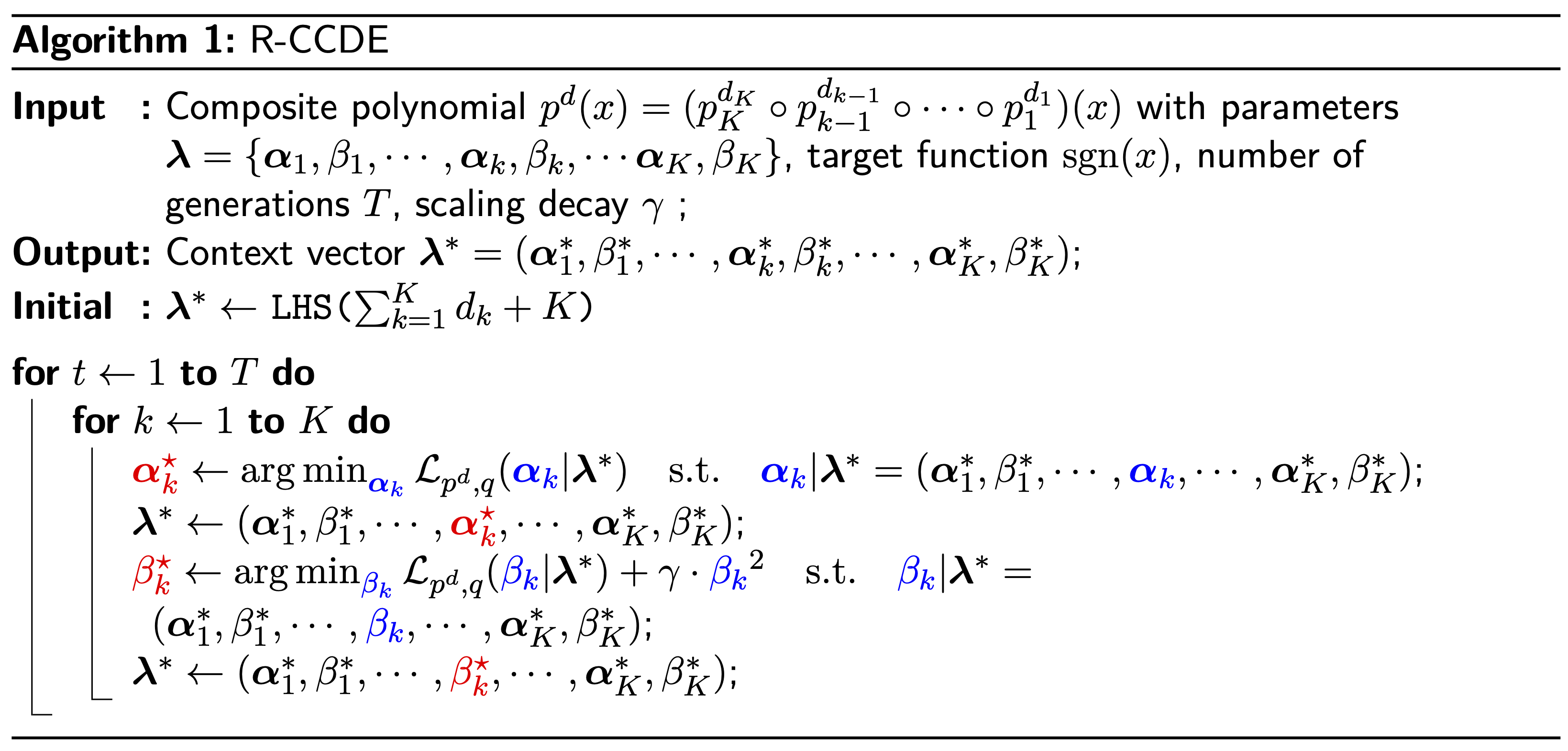

Full Search Algorithm

- MOCoEv: Multi-Objective CoEvolutionary algorithm searches for layerwise polynomials

- R-CCDE: Regularized Cooperative Coevolution Differentiable Evolution optimizes coefficients

- PAT: Polynomial-Aware Training can avoid gradient exploding due to polynomials

Flexible Homomorphic Encryption Architecture

Evolving Composite Polynomials

EvoReLU: a composite polynomial

- $\mathrm{EvoReLU}(x)=x\cdot(0.5+p^d(x))$ by using $\mathrm{ReLU}(x)=x\cdot(0.5+0.5\cdot\mathrm{sgn}(x))$

- Composite polynomial $p^d(x)=(p_K^{d_K}\circ\cdots\circ p_2^{d_2}\circ p_1^{d_1})(x)$

- Sub-polynomial $p_k(x)=(1/\beta_k)\sum_{i=1}^{d_k} \alpha_i T_i(x)$, $T_i(x)$ is Chebyshev basis

- Search for number of sub-polynomials $K$, degrees $\{d_k\}_{k=1}^{d_K}$ and coefficients $\{\bm{\alpha}_k, \beta_k\}_{k=1}^K$

Search Variables

| Variable | Options |

|---|---|

| # polynomials $(K)$ | 6 |

| poly degree $()d_k$ | {0,1,3,5,7} |

| coefficients $(\Lambda)$ | $\mathbb{R}$ |

Search Space

Search Space

- High-dimensional multi-objective optimization

- Extremely large search space

| Backbone | # EvoReLU | # Sub-polynomial | Search Space Size |

|---|---|---|---|

| ResNet-20 | 19 | 114 | $10^{79}$ |

| ResNet-32 | 31 | 186 | $10^{130}$ |

| ResNet-44 | 43 | 258 | $10^{180}$ |

| ResNet-56 | 55 | 330 | $10^{230}$ |

Search Objective

Search Objective

$$

\begin{aligned}

\min_{\bm D} & \quad \left\{1-\mathrm{Acc}_{val}\left(f(\bm{\omega}^\ast);\bm{D},\bm{\Lambda}(\bm{D})\right), \mathrm{Boot}(\bm D) \right\} \\

& \quad \bm{\omega}^\ast = \argmin_{\bm{\omega}} \mathcal{L}_{train} \left( f(\bm{\omega});\bm{D},\bm{\Lambda}(\bm{D}) \right)

\end{aligned}

$$

$\bm{D}$ all degrees, $\bm{\Lambda}(\bm{D})$ all coefficients, $\bm{\omega}$ network weight, $ \mathrm{Boot}(\bm D)$ #bootstrapping operations

MOCoEV

Operations

- Selection: Randomly select $N$ solutions from current trade-off front to build a mating set.

- Crossover enables network-level information exchange.

- Mutation is a coevolutionary operation. Explores the $m$-th layer with other layers fixed.

- R-CCDE optimizes polynomial coefficients.

- PAT fine-tunes new solutions $\{\bm{D}_j^\prime, \bm{\Lambda}_j^\prime\}_{j=1}^N$.

- Evaluate accuracy and count bootstrapping.

- Update trade-off front.

R-CCDE Algorithm

R-CCDE: Optimize Polynomial Coefficients

AutoFHE: Bi-Level Multi-Objective Search

Experimental Setup

- Hyperparameters: Generations 20, Population size 50, Coefficient search space $[-5, 5]$, DE population size $10\times\#$variables, Replace sub-polynomial $0.5$, remove sub-polynomial $0.4$, insert sub-polynomial $0.1$

- Time Complexity: On one NVIDIA RTX A6000 GPU, search process for ResNet-20/32/44/56 on CIFAR-10 took 59 hours, 126 hours, 200 hours, 281 hours, respectively. The search for ResNet-32 on CIFAR-100 took 140 hours.

AutoFHE: Better Trade-off

AutoFHE: Better Trade-off under RNS-CKKS FHE

Evaluation under RNS-CKKS FHE

- The inference time for 50 images is evaluated on AMD EPYC 7H12 64-core processor with 50 threads.

- AutoFHE: Automated Adaption of CNNs for Efficient Evaluation over FHE, Cryptology ePrint Archive 2023.

- FHE-MP-CNN: Low-Complexity Convolutional Neural Networks on Fully Homomorphic Encryption Using Multiplexed Parallel Convolutions, ICML 2022

AutoFHE: Layerwise Polynomials

Summary

- Neural networks need to be redesigned for operating on encrypted data.

- Manual design of architecture is difficult, sub-optimal and time-consuming.

- Multi-Objective optimization is an attractive and effective solution in practice.